![4. Spark SQL and DataFrames: Introduction to Built-in Data Sources - Learning Spark, 2nd Edition [Book]](https://www.oreilly.com/api/v2/epubs/9781492050032/files/assets/lesp_0401.png)

4. Spark SQL and DataFrames: Introduction to Built-in Data Sources - Learning Spark, 2nd Edition [Book]

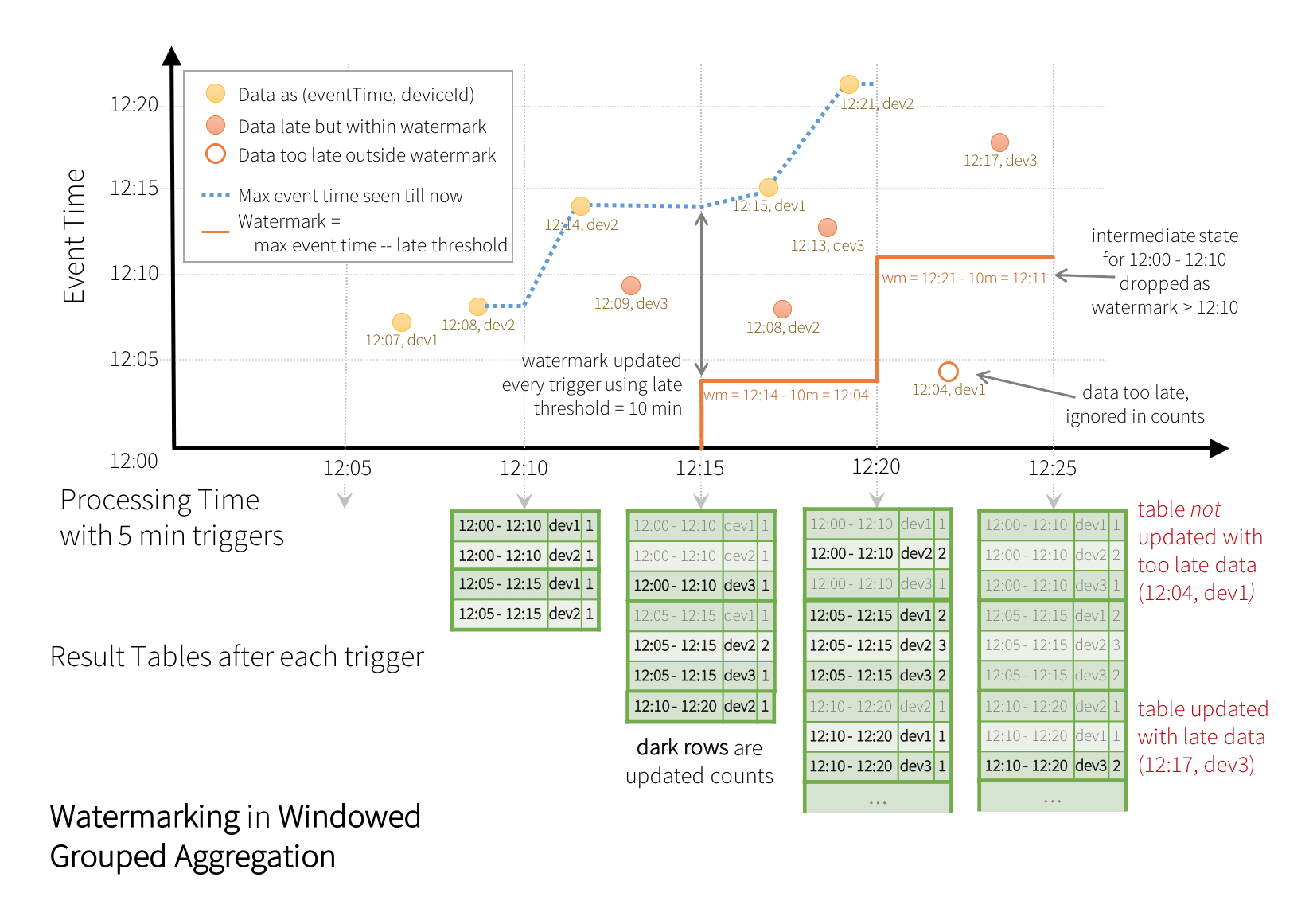

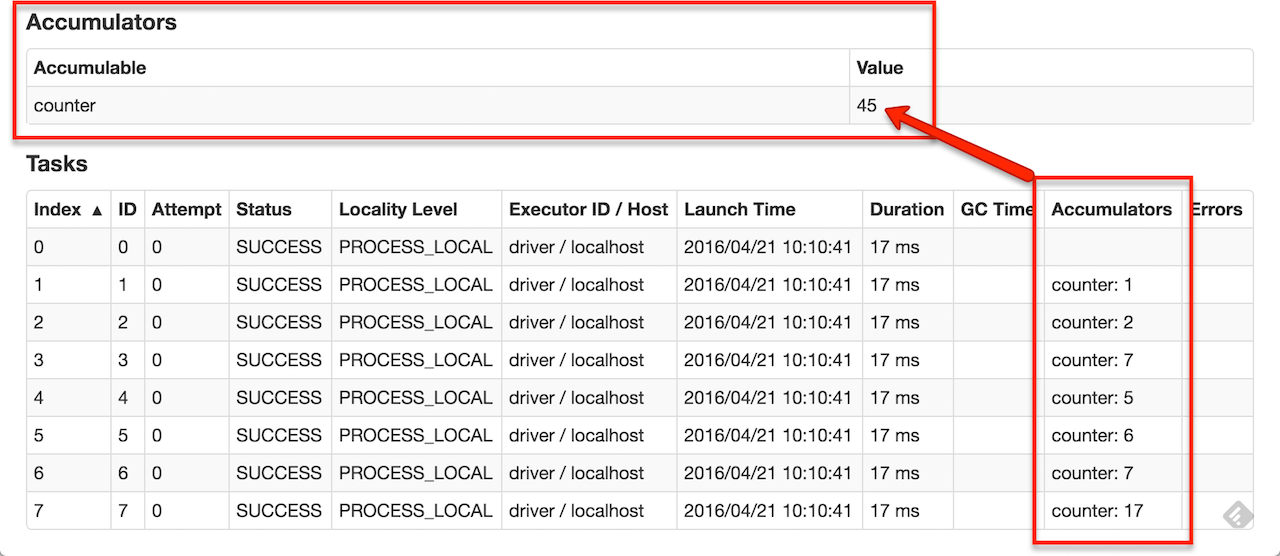

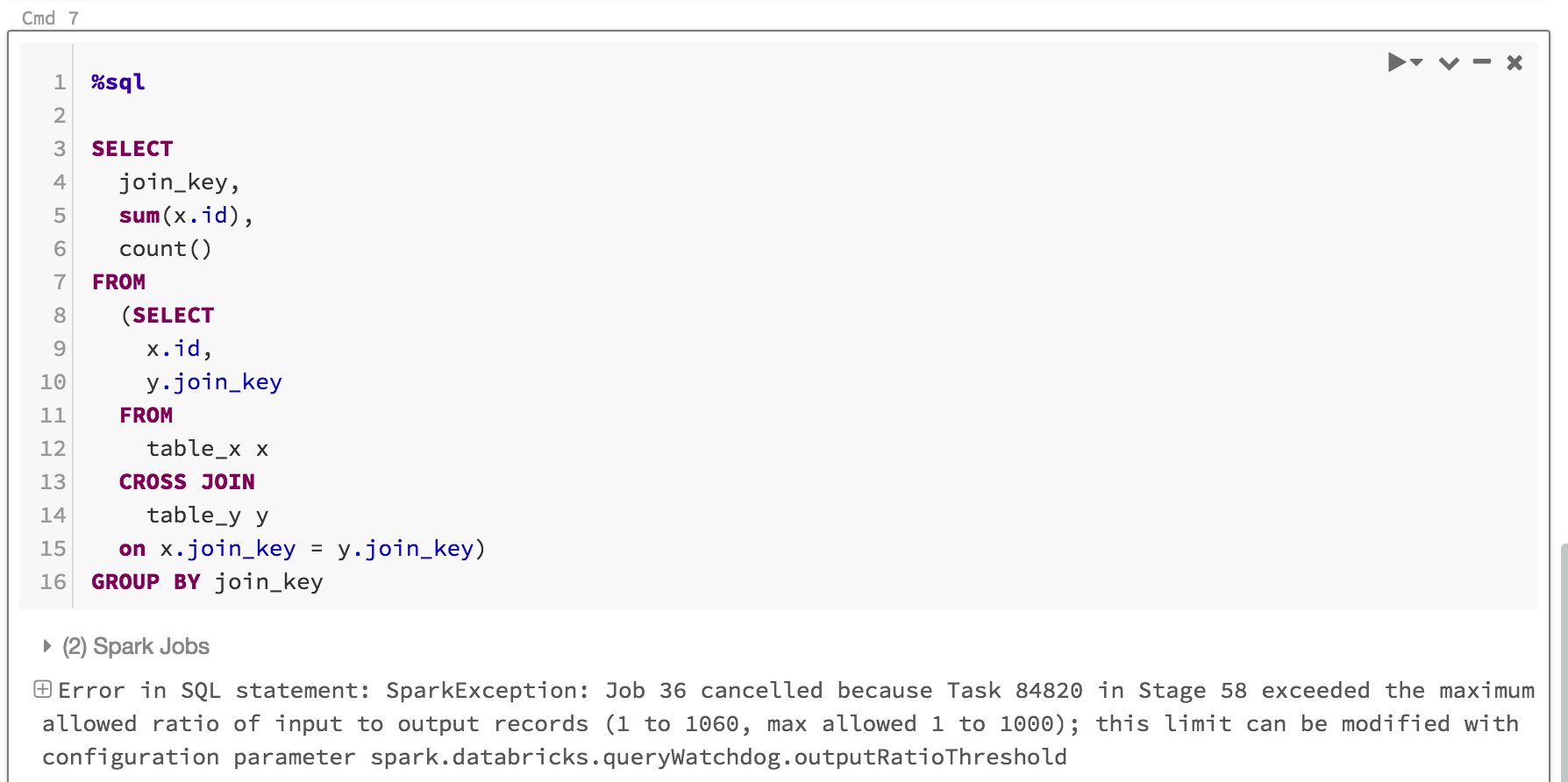

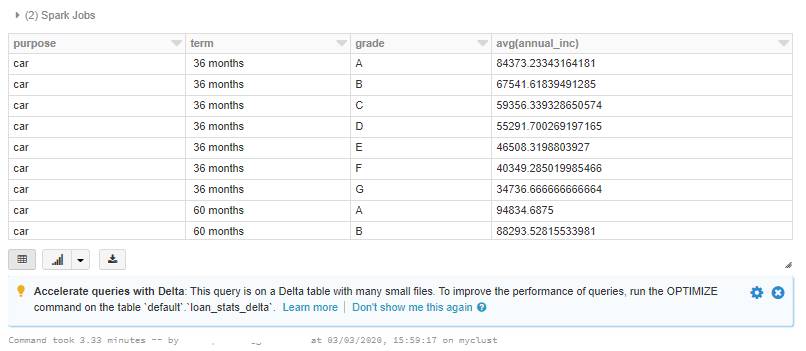

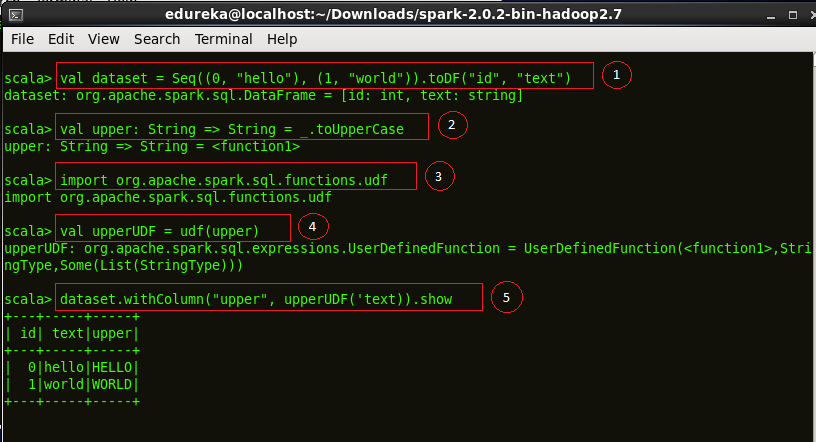

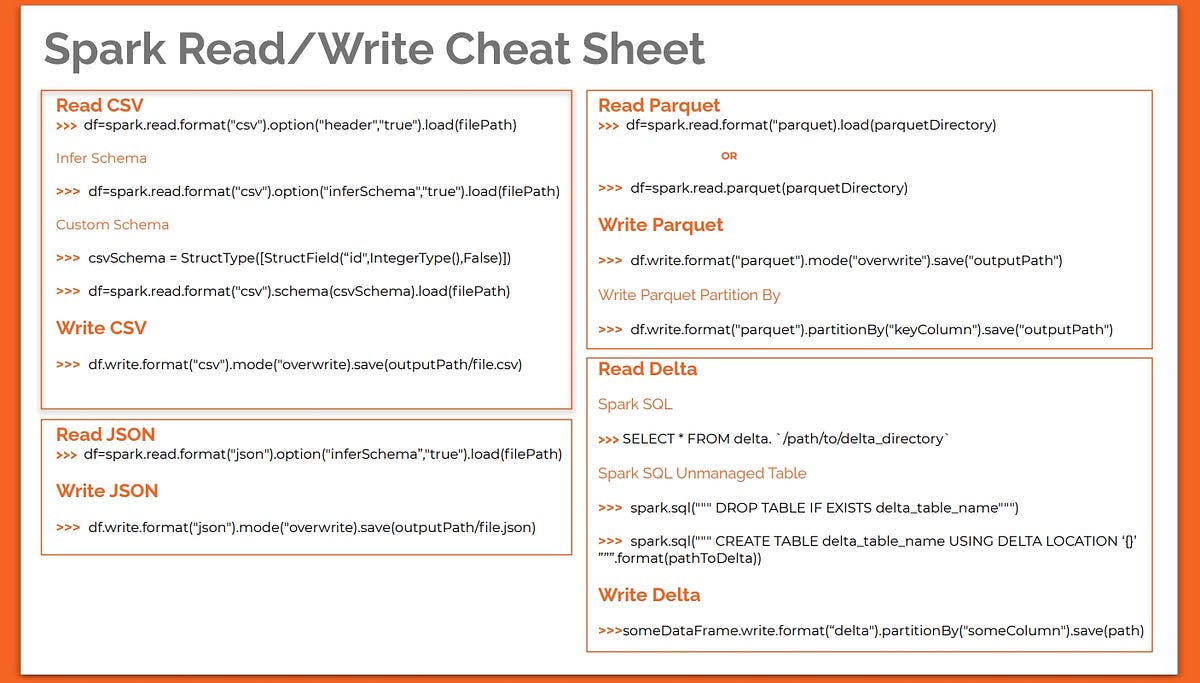

SQL at Scale with Apache Spark SQL and DataFrames — Concepts, Architecture and Examples | by Dipanjan (DJ) Sarkar | Towards Data Science

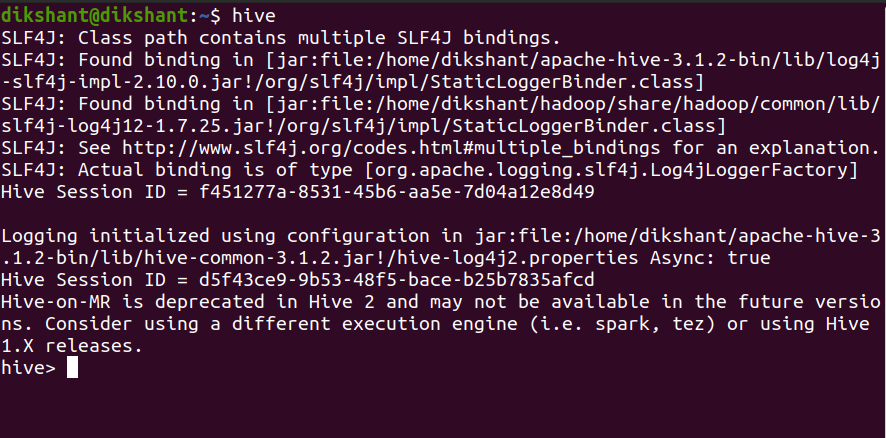

How to UPSERT data into a relational database using Apache Spark: Part 1(Python Version) | by Thomas Thomas | Medium

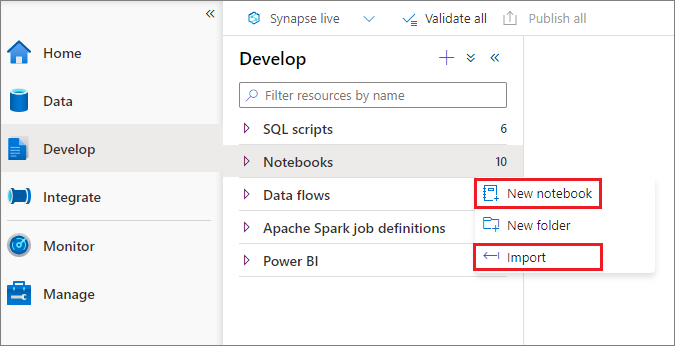

Kickstart your Apache Spark learning in Azure Synapse with immediately available samples - Microsoft Community Hub